Introduction

Q-learning is a popular reinforcement learning algorithm, used for solving Markov Decision Processes (MDP). In some cases, Q-learning doesn’t work well and takes a long time to converge, due to an issue known as the optimizer’s curse or maximization bias. In this post, we’ll take a look at the problem as well as a proposed solution. What follows is a brief recapitulation of MDP’s and Q-learning, followed by a deep dive into Double Q learning, the proposed solution to the problem.

Recap of Q learning

A Markov chain consists of states connected through transition probabilities, which determine the likelihood of moving from one state to another. This probability depends only on the current state, not on the sequence of events that preceded it. Some states are accessible only from specific other states, forming a directed network.

A Markov Decision Process (MDP) extends the concept of a Markov chain by incorporating decisions. It substitutes transition probabilities with actions that represent available choices. Each state in an MDP is linked with a reward, indicating the value of reaching that state. The distinct feature of MDPs is the decision-making aspect, where an agent selects actions to transition between states and accumulate rewards. The goal in an MDP is to find an optimal policy, which is a set of rules defining the best action to take in each state to maximize rewards.

A trajectory through an MDP is represented using the notation: starting at state S, an action A is chosen from the available options in state S. This leads to a transition to state S' with probability P, and a reward R is received. The tuple (S, A, P, R) describes this process, where P is defined as Pr(S' | S, A). This sequence repeats at each step until a terminal state is reached, outlining the full trajectory:

S0, A0, R1, S1, A1, R2, S2, A2, R3, ...

The Q(action, value) function under a policy π is formally defined as:

Qπ(s, a) = E[G | s, a, π]

where E[G | s, a, π] represents the expected total (discounted) reward given that we start in state s, take action a, and then follow policy π for all subsequent decisions. This expectation accounts for the sum of rewards received, starting from s and a, under the guidance of policy π.

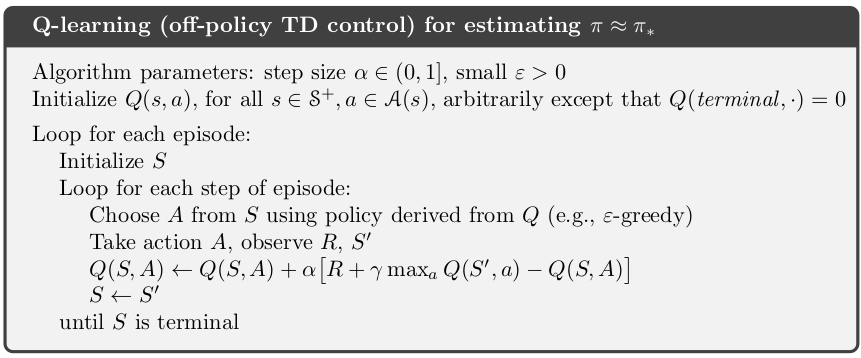

In Q-learning, the objective is to approximate the optimal Q function, which represents the best action values under an optimal policy, regardless of the initial policy used to generate training data. The policy generating our training data decides actions, which might not be optimal. Our aim is to iteratively refine our Q function based on these examples. The algorithm is as follows:

Q-learning algorithm (Sutton and Barto)

For a full discussion of Q-learning I recommend the following 2 sources:

- Hugging Face course on Q-learning. This is a nice quick overview.

- For a full treatment see the RL book by Sutton and Barto, which is available free here.

Dissection of the problem

A common issue with Q-learning involves how it handles variance in rewards. Consider an MDP where we start in state A with the options to move to B or directly to a terminal state T, neither transition offering any reward. From B, we can transition to several secondary states, C1, C2, ..., Cn, each associated with a reward from a normal distribution with a negative mean (e.g., -0.1) and a variance (e.g., 1). Transitioning from any Cn to T yields no reward. Ideally, the optimal strategy is to move from A to T, avoiding negative rewards in the C states. However, the stochastic nature of rewards means a visit to any C state might yield a positive reward. The likelihood of receiving a positive reward increases with the number of C states.

This variance introduces a challenge in Q-learning. The algorithm estimates the Q-value for transitioning from A to B based on the maximum reward obtainable from moving to any C state. Given the rewards are drawn from a distribution, it’s probable to encounter a positive reward in one of the C states during exploration. Consequently, the Q-function may overestimate the value of moving from A to B. Essentially, the “max” operation in the update rule can cause a single overoptimistic estimate to skew the Q-values, leading to incorrect policy decisions.

A more in-depth explanation can be found in the paper The Optimizer’s Curse: Skepticism and Postdecision Surprise in Decision Analysis (pdf) by Smith and Winkler.

Solution

A solution to this problem, Double Q-learning, was proposed by Hasselt (pdf). Let’s view our problem through a slightly different lens. The issue arises because we are using the same samples of C twice. Once to estimate the value of taking an action; Q(B, move to Ci). Secondly when performing the maximizing operation to determine which Ci is best to move to from state b; maxi Q(B, Ci). If we instead use 2 independent estimates, Q1 and Q2, we alleviate this problem. Q1 might overestimate the value of moving to a particular state Ci, but it’s very unlikely that Q2 also estimates moving to Ci to be the best action to take from B.

This sounds confusing, so let’s walk through the original Q-learning update again. After removing the discount (unimportant for our purposes) we are left with:

Q(s, a) = Q(s, a) + α * (reward + max a Q(s’,a) - Q(s,a))

Conceptually we are doing the following:

# the old/current estimate of taking action a in state s |

What’s important to realize is we are making use of the same Q function to get our estimates twice. Once for Q(s’,a) to get the value of the new state action pair, and again when performing max a on Q(s’,a) to decide what value of a to use.

The double Q-learning solution to our problem says we should use two independent estimates of the state-action values. Our update is now as follows:

# the old/current estimate of taking action a in state s |

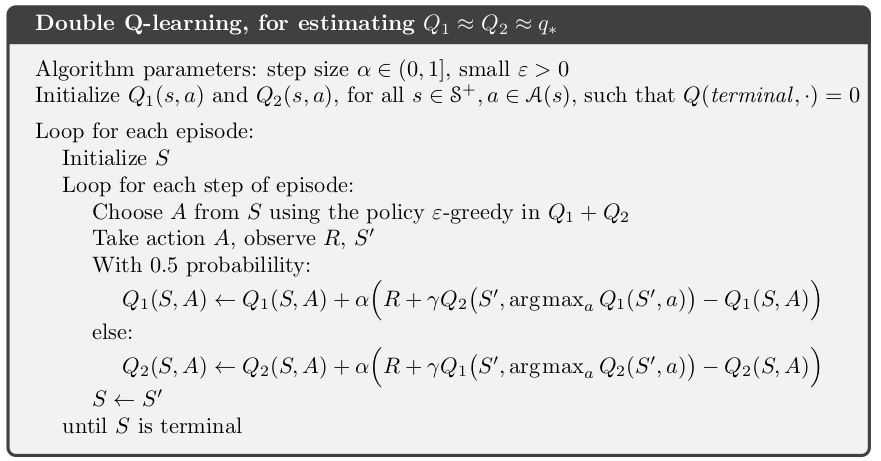

The full double Q-learning algorithm is as follows:

Double Q-learning (Sutton and Barto)

Since Q1 is updated on different samples than Q2, they are not subject to the maximization bias. The algorithm does require more memory to store two Q functions. The computational cost stays the same.

Code Walkthrough

The first time I went through this, it was a bit of a head-scratcher, so let’s walk through the code in python to make it more concrete. We will be using the exact same example MDP as above. We will run both Q-learning and Double Q-learning and compare their results. The full code is available here.

Create a Markov process. Note that the values of states C are drawn from N(-0.1, 1).

def get_reward(state: str) -> float: |

Our Q functions are simply dictionaries.

q: Dict[Tuple[str, int], float] = {} |

Now define a function to do the Q-learning update. max_a uses the provided q to find the best next value.

def q_update( |

The Double Q-learning update. argmax_a finds the best next action, using the provided Q function.

def double_q_update( |

At this point, we simulate both.

def simulate( |

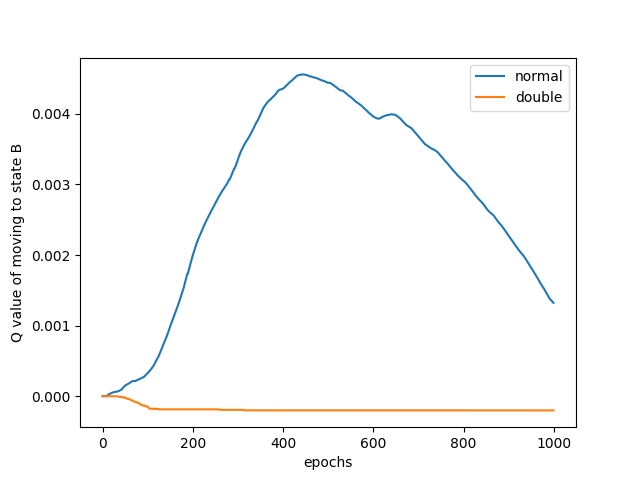

Now we can plot the results. They will differ every time, but mostly look something like this.

My results

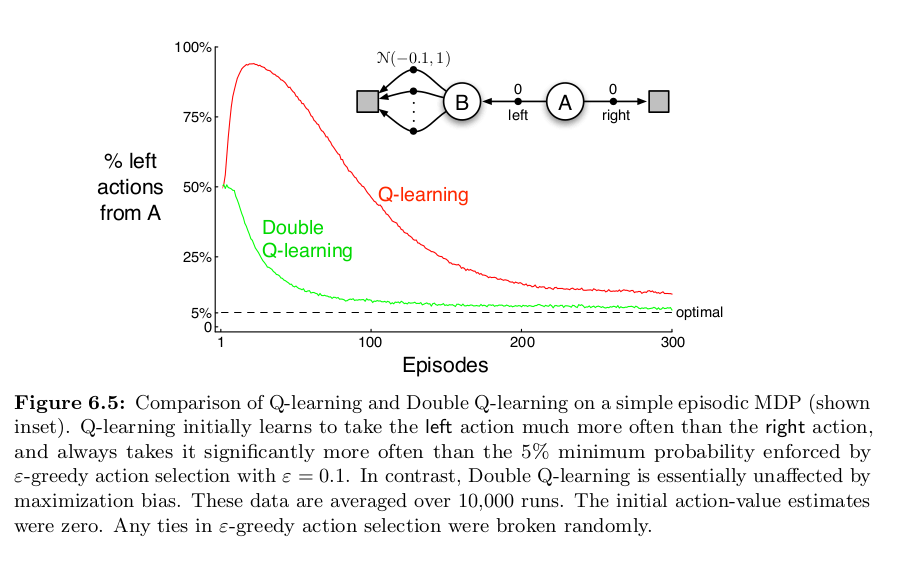

We can clearly see how Q-learning has trouble converging on the correct result. I was so excited when I got this result, because it mirrors very closely with that of Sutton and Barto!

Sutton's results (Sutton and Barto)

Conclusion

I went into this deep dive because I had trouble understanding this myself. I’m still not entirely sure I understand it, but writing out the code certainly helps. Sutton and Barto’s book on RL really is a must. Other useful resources are these series on YouTube:

And of course, Andrej Karpathy’s blog post.